![Imaginary Numbers Are Real [Part 1: Introduction] - YouTube](https://i.ytimg.com/vi/3d6DsjIBzJ4/default.jpg)

Contents:

- 13\. Conjugate Gradients on the Normal Equations
- 14\. The Nonlinear Conjugate Gradient Method
  - 14.1. Outline of the Nonlinear Conjugate Gradient Method
  - 14.2. General Line Search
  - 14.3. Preconditioning
- A. Notes
- B. Canned Algorithms
  - B1. Steepest Descent
  - B2. Conjugate Gradients
  - B3. Preconditioned Conjugate Gradients

Apply the conjugate gradient method to this problem from $\mathbf{x}_0 = \mathbf{0}$ and show that it converges in two iterations. Verify that there are just two independent vectors in the sequence $\mathbf{g}_0, \mathbf{G}\mathbf{g}_0, \mathbf{G}^2\mathbf{g}_0, \dots$.

Contents:

- Nonlinear Conjugate Gradient Methods
- Principal Axis Method
- Methods for Solving Nonlinear Equations
  - Introduction
  - Newton's Method
  - The Secant Method
  - Brent's Method
- Step Control
  - Introduction
  - Line Search Methods
  - Trust Region Methods
- Setting Up Optimization Problems in Mathematica
  - Specifying Derivatives

`Div[{f1, f2, …, fn}, {x1, x2, …, xn}]` is the trace of the gradient of f. In a Euclidean coordinate chart c, Div can be computed by transforming to Cartesian coordinates and then back. The result is the same as computing `Div[f, {x1, …, xn}, c]` directly.

The conjugate gradient method is a technique for solving linear systems (equivalently, minimizing quadratic functions) and for optimizing nonlinear functions.
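The exercise above does not state which problem it refers to. The sketch below therefore assumes a hypothetical quadratic $f(\mathbf{x}) = \tfrac12\mathbf{x}^T\mathbf{G}\mathbf{x} - \mathbf{b}^T\mathbf{x}$ with $\mathbf{G} = \operatorname{diag}(1, 1, 3)$ and $\mathbf{b} = (1, 1, 1)^T$. This $\mathbf{G}$ was chosen only because it has two distinct eigenvalues. With the gradient $\mathbf{g} = \mathbf{G}\mathbf{x} - \mathbf{b}$, plain linear CG started at $\mathbf{x}_0 = \mathbf{0}$ stops after two iterations. The vectors $\mathbf{g}_0, \mathbf{G}\mathbf{g}_0, \mathbf{G}^2\mathbf{g}_0, \dots$ span only a two-dimensional space. This is a pure-Python illustration, not the specific algorithm the exercise's author was following.

```python
# Hypothetical problem (the exercise does not state its own): minimize
# f(x) = 0.5 x^T G x - b^T x with G = diag(1, 1, 3), b = (1, 1, 1).
# G has two distinct eigenvalues, so CG from x0 = 0 should stop after 2 steps.


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def conjugate_gradient(G, b, x0, tol=1e-10, max_iter=100):
    """Linear CG for symmetric positive-definite G. Returns (x, iterations)."""
    x = list(x0)
    r = [bi - gxi for bi, gxi in zip(b, matvec(G, x))]  # residual r = -g
    if dot(r, r) ** 0.5 < tol:
        return x, 0
    d = list(r)
    rr = dot(r, r)
    for k in range(1, max_iter + 1):
        Gd = matvec(G, d)
        alpha = rr / dot(d, Gd)            # exact minimizer of f along d
        x = [xi + alpha * di for xi, di in zip(x, d)]
        r = [ri - alpha * gdi for ri, gdi in zip(r, Gd)]
        rr_new = dot(r, r)
        if rr_new ** 0.5 < tol:
            return x, k
        beta = rr_new / rr                 # keeps the new direction G-conjugate
        d = [ri + beta * di for ri, di in zip(r, d)]
        rr = rr_new
    return x, max_iter


def krylov_sequence(G, g0, count):
    """Return [g0, G g0, G^2 g0, ...] containing `count` vectors."""
    vectors = [list(g0)]
    for _ in range(count - 1):
        vectors.append(matvec(G, vectors[-1]))
    return vectors


def matrix_rank(rows, tol=1e-9):
    """Rank via Gaussian elimination with partial pivoting."""
    m = [list(map(float, row)) for row in rows]
    ncols = len(m[0])
    rank = 0
    for col in range(ncols):
        if rank == len(m):
            break
        pivot = max(range(rank, len(m)), key=lambda i: abs(m[i][col]))
        if abs(m[pivot][col]) <= tol:
            continue                       # no usable pivot in this column
        m[rank], m[pivot] = m[pivot], m[rank]
        for i in range(rank + 1, len(m)):
            factor = m[i][col] / m[rank][col]
            for j in range(col, ncols):
                m[i][j] -= factor * m[rank][j]
        rank += 1
    return rank


G = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 3.0]]
b = [1.0, 1.0, 1.0]
x0 = [0.0, 0.0, 0.0]

x, iterations = conjugate_gradient(G, b, x0)
g0 = [gxi - bi for gxi, bi in zip(matvec(G, x0), b)]  # g = G x - b
rank = matrix_rank(krylov_sequence(G, g0, 4))
print(iterations, x, rank)  # expected: 2 iterations, x approx [1, 1, 1/3], rank 2
```

The two results are linked. CG builds its iterates from the Krylov space spanned by $\mathbf{g}_0, \mathbf{G}\mathbf{g}_0, \dots$. Once that space stops growing (here at dimension two), the method has nothing left to search and terminates.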
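Here is a small worked instance of the statement that the divergence is the trace of the gradient. Take the field $\mathbf{f}(x, y, z) = (x^2,\ xy,\ z)$ in Cartesian coordinates. This field is an arbitrary illustrative choice, not an example taken from the Mathematica documentation. The gradient of $\mathbf{f}$ is its Jacobian matrix, and summing the diagonal gives the divergence:

```latex
\nabla \mathbf{f} =
\begin{pmatrix}
\partial_x f_1 & \partial_y f_1 & \partial_z f_1\\
\partial_x f_2 & \partial_y f_2 & \partial_z f_2\\
\partial_x f_3 & \partial_y f_3 & \partial_z f_3
\end{pmatrix}
=
\begin{pmatrix}
2x & 0 & 0\\
y & x & 0\\
0 & 0 & 1
\end{pmatrix},
\qquad
\operatorname{div}\mathbf{f} = \operatorname{tr}(\nabla\mathbf{f})
= \sum_{i=1}^{3}\frac{\partial f_i}{\partial x_i} = 2x + x + 1 = 3x + 1.
```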
Conjugate gradient is generally used as an iterative algorithm, but it can also serve as a direct method: in exact arithmetic it solves an $n \times n$ symmetric positive-definite linear system in at most $n$ iterations. In floating-point arithmetic it produces a numerical (approximate) solution.

Conjugate gradient descent. The gradient descent algorithms above are toys, not to be used on real problems. As the experiments above show, one problem with simple gradient descent is that it tends to oscillate across a valley. Each step follows the direction of the gradient, which makes it cross the valley.

Conjugate Gradient Method. The conjugate gradient method is an algorithm for finding the nearest local minimum of a function of several variables, and it presupposes that the gradient of the function can be computed. It uses conjugate directions instead of the local gradient for going downhill. If the vicinity of the minimum has the shape of a long, narrow valley, the minimum is reached in far fewer ...

Related repositories include siesta-project/flos (Lua) and one described as "... conjugate gradient algorithm, with improved efficiency using C++ Armadillo linear algebra library, and flexibility for user-specified preconditioning method" (topics: optimization, conjugate-gradient, preconditioner).
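The sketch below makes the valley argument above concrete. It minimizes the hypothetical quadratic $f(\mathbf{x}) = \tfrac12(x_1^2 + 50x_2^2)$, whose level sets form a long, narrow valley, starting from $(50, 1)$. Both methods use an exact line search, which has a closed form for quadratics. Steepest descent zigzags across the valley and needs hundreds of steps. Linear CG, acting as a direct method, finishes in at most $n = 2$ iterations. The matrix, starting point and tolerance are assumptions chosen for illustration.

```python
# Hypothetical narrow valley: f(x) = 0.5 x^T A x - b^T x with A = diag(1, 50), b = 0.


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def steepest_descent(A, b, x0, tol=1e-8, max_iter=10_000):
    """Gradient descent with exact line search. Returns (x, iterations)."""
    x = list(x0)
    for k in range(max_iter):
        g = [axi - bi for axi, bi in zip(matvec(A, x), b)]  # gradient A x - b
        if dot(g, g) ** 0.5 < tol:
            return x, k
        alpha = dot(g, g) / dot(g, matvec(A, g))           # exact step length
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
    return x, max_iter


def conjugate_gradient(A, b, x0, tol=1e-8, max_iter=10_000):
    """Linear CG with the same stopping rule. Returns (x, iterations)."""
    x = list(x0)
    r = [bi - axi for bi, axi in zip(b, matvec(A, x))]     # residual = -gradient
    d = list(r)
    rr = dot(r, r)
    for k in range(max_iter):
        if rr ** 0.5 < tol:
            return x, k
        Ad = matvec(A, d)
        alpha = rr / dot(d, Ad)
        x = [xi + alpha * di for xi, di in zip(x, d)]
        r = [ri - alpha * adi for ri, adi in zip(r, Ad)]
        rr_new = dot(r, r)
        d = [ri + (rr_new / rr) * di for ri, di in zip(r, d)]  # A-conjugate direction
        rr = rr_new
    return x, max_iter


A = [[1.0, 0.0], [0.0, 50.0]]
b = [0.0, 0.0]
x0 = [50.0, 1.0]

_, sd_iters = steepest_descent(A, b, x0)
_, cg_iters = conjugate_gradient(A, b, x0)
print("steepest descent:", sd_iters, "iterations; CG:", cg_iters, "iterations")
```

Both solvers see the same gradients and use the same exact line search. The only difference is that CG mixes the previous direction into the new one, and that is what stops the zigzag.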
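The nonlinear case matches the definition above: a general function whose gradient can be computed. A minimal sketch follows, with several assumptions for illustration:

- It uses the Polak-Ribière formula with the common non-negativity safeguard (often called PR+).
- Step lengths come from a simple Armijo backtracking line search.
- It restarts with the steepest-descent direction whenever the CG direction is not a descent direction.
- The test function is $f(x, y) = e^{x + 3y - 0.1} + e^{x - 3y - 0.1} + e^{-x - 0.1}$, whose minimizer is $(-\tfrac12\ln 2,\ 0)$.

These choices and all parameter values are assumptions. Practical implementations usually use a line search satisfying the (strong) Wolfe conditions instead.

```python
import math


def f(p):
    """Hypothetical smooth convex test function."""
    x, y = p
    return math.exp(x + 3 * y - 0.1) + math.exp(x - 3 * y - 0.1) + math.exp(-x - 0.1)


def grad(p):
    x, y = p
    a = math.exp(x + 3 * y - 0.1)
    b = math.exp(x - 3 * y - 0.1)
    c = math.exp(-x - 0.1)
    return [a + b - c, 3 * a - 3 * b]


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def backtracking(fun, x, d, fx, slope, t=1.0, shrink=0.5, c1=1e-4):
    """Armijo backtracking: shrink t until fun(x + t d) <= fx + c1 * t * slope."""
    while t > 1e-16:
        trial = [xi + t * di for xi, di in zip(x, d)]
        try:
            ft = fun(trial)
        except OverflowError:  # math.exp overflowed: treat the trial as far too large
            ft = math.inf
        if ft <= fx + c1 * t * slope:
            return t
        t *= shrink
    return t


def nonlinear_cg(fun, gradient, x0, tol=1e-6, max_iter=1000):
    """PR+ nonlinear conjugate gradient. Returns (x, iterations)."""
    x = list(x0)
    g = gradient(x)
    d = [-gi for gi in g]
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) < tol:
            return x, k
        slope = dot(g, d)
        if slope >= 0:                      # not a descent direction: restart
            d = [-gi for gi in g]
            slope = -dot(g, g)
        t = backtracking(fun, x, d, fun(x), slope)
        x = [xi + t * di for xi, di in zip(x, d)]
        g_new = gradient(x)
        # Polak-Ribiere beta clipped at zero (PR+); beta = 0 means a restart
        beta = max(0.0, dot(g_new, [gn - go for gn, go in zip(g_new, g)]) / dot(g, g))
        d = [-gn + beta * di for gn, di in zip(g_new, d)]
        g = g_new
    return x, max_iter


x_min, iters = nonlinear_cg(f, grad, [-1.0, 1.0])
print(iters, x_min)  # x_min should be close to (-ln(2)/2, 0), i.e. about (-0.3466, 0)
```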
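The repository description above mentions user-specified preconditioning. One way to expose that is to pass the preconditioner as a function that applies $M^{-1}$ to a vector, as sketched below. The Jacobi (diagonal) preconditioner, the 3×3 matrix and the function names are illustrative assumptions. They are not the API of any repository listed here.

```python
def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def preconditioned_cg(A, b, apply_minv, x0=None, tol=1e-10, max_iter=1000):
    """Solve A x = b for symmetric positive-definite A.

    apply_minv(r) must return M^{-1} r for a symmetric positive-definite
    preconditioner M. Returns (x, iterations).
    """
    x = [0.0] * len(b) if x0 is None else list(x0)
    r = [bi - axi for bi, axi in zip(b, matvec(A, x))]
    if dot(r, r) ** 0.5 < tol:
        return x, 0
    z = apply_minv(r)                      # preconditioned residual
    d = list(z)
    rz = dot(r, z)
    for k in range(1, max_iter + 1):
        Ad = matvec(A, d)
        alpha = rz / dot(d, Ad)
        x = [xi + alpha * di for xi, di in zip(x, d)]
        r = [ri - alpha * adi for ri, adi in zip(r, Ad)]
        if dot(r, r) ** 0.5 < tol:
            return x, k
        z = apply_minv(r)
        rz_new = dot(r, z)
        d = [zi + (rz_new / rz) * di for zi, di in zip(z, d)]
        rz = rz_new
    return x, max_iter


def jacobi_preconditioner(A):
    """Hypothetical helper: M = diag(A), so M^{-1} r scales r by 1 / A[i][i]."""
    inv_diag = [1.0 / A[i][i] for i in range(len(A))]
    return lambda r: [w * ri for w, ri in zip(inv_diag, r)]


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]

x, iters = preconditioned_cg(A, b, jacobi_preconditioner(A))
residual = max(abs(bi - axi) for bi, axi in zip(b, matvec(A, x)))
print(iters, x, residual)
```

Passing `lambda r: list(r)` as `apply_minv` recovers unpreconditioned CG. Because the preconditioner is an arbitrary callable, any SPD approximation of $A^{-1}$ can be plugged in without touching the solver.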

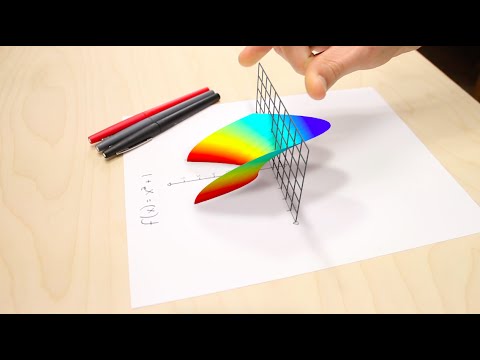

Related videos:

- Numerical Optimization, by Dr. Shirish K. Shevade, Department of Computer Science and Engineering, IISc Bangalore (NPTEL).
- Taylor polynomials are incredibly powerful for approximations, and Taylor series can give new ways to express functions.
- A Bode plot example by Ahmed Abu-Hajar, Ph.D., shared to help students in his Linear Controls course.
- A visualization explaining imaginary numbers and functions of complex variables, including exponentials (Euler's formula) and the sine and cosine of complex nu...
- An animated introduction to the Fourier transform, from 3Blue1Brown (https://www.3blue1brown.com/).
- A short tutorial on finding the argument of a complex number, using an Argand diagram to explain the meaning of an argument.

Copyright © 2024 ms.sitekingbet90.site